A brief history of what kicked off the controversy. Using IQ tests to screen employees is widely but mistakenly thought to be illegal; the myth originates from a Supreme Court case involving a company that used IQ tests to screen employees into four of its departments:

In the 1950s, Duke Power's Dan River Steam Station in North Carolina had a policy restricting black employees to its "Labor" department, where the highest-paying position paid less than the lowest-paying position in the four other departments. In 1955, the company added the requirement of a high school diploma for employment in any department other than Labor, and offered to pay two-thirds of the high-school training tuition for employees without a diploma.[3]

On July 2, 1965, the day the Civil Rights Act of 1964 took effect, Duke Power added two employment tests, which would allow employees without high-school diplomas to transfer to higher-paying departments. The Bennett Mechanical Comprehension Test was a test of mechanical aptitude, and the Wonderlic Cognitive Ability Test was an IQ test measuring general intelligence.

This eventually resulted in a lawsuit that reached the Supreme Court, which ruled that “Broad aptitude tests used in hiring practices that disparately impact ethnic minorities must be reasonably related to the job”.

While this didn’t actually make IQ testing for employment illegal, it did discourage the practice, and that prompted people to study the association between intelligence and job performance.

A 1984 meta-analysis of studies on the topic found a mean correlation between IQ and job performance of 0.53. This meta-analysis and others have been scrutinized and debated in the scientific literature. Two articles are of note: a rebuttal from Richardson and Norgate (R&N), who argue that the correlation from the Hunter & Schmidt meta-analysis is inflated, and a defense from Zimmer and Kirkegaard (Z&K), who argue that the true correlation is between 0.5 and 0.6. From what I have read in each paper, these are the main points of contention:

Publication bias: neither paper discusses this, but priors suggest it should be an issue.

Correction for reliability of IQ: R&N argue against correcting for the unreliability of IQ tests. Because real tests are not perfectly reliable, the correction would overstate their usefulness in predicting job performance in the real world. I think this criticism is appropriate.

Correction for reliability of job performance: R&N argue that the intra-rater reliability (reliability within raters) should be used to adjust for unreliability instead of the inter-rater reliability (reliability between raters), because raters may value different traits in employees. I find this unconvincing: the whole point of correcting for inter-rater reliability is that raters do not assess the talent and productivity of employees perfectly.

The magnitude of the inter-rater reliability: Z&K note that the original Hunter & Schmidt 1984 meta-analysis assumed a reliability of 0.6, but they later published a meta-analysis which suggested that it was actually a bit lower, 0.52, so the true correlation would have to be adjusted upwards.
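To see why a lower reliability pushes the estimate up, here is a minimal sketch of the classical correction for attenuation in the criterion, where the observed correlation is divided by the square root of the rater reliability. The observed correlation of 0.25 is a made-up illustrative value, not a figure from either paper, and the function name is my own; only the reliabilities 0.60 and 0.52 come from the discussion above.

```python
import math

def correct_for_criterion_unreliability(r_observed, criterion_reliability):
    """Classical disattenuation: divide by the square root of the criterion reliability."""
    return r_observed / math.sqrt(criterion_reliability)

r_observed = 0.25  # hypothetical observed correlation, for illustration only

old_estimate = correct_for_criterion_unreliability(r_observed, 0.60)
new_estimate = correct_for_criterion_unreliability(r_observed, 0.52)

# The ratio does not depend on r_observed: it is sqrt(0.60 / 0.52).
inflation = new_estimate / old_estimate

print(f"corrected with reliability 0.60: {old_estimate:.3f}")
print(f"corrected with reliability 0.52: {new_estimate:.3f}")
print(f"relative increase: {100 * (inflation - 1):.1f}%")
```

Whatever the observed correlation is, moving from 0.60 to 0.52 scales the corrected value by the same factor, roughly a 7% increase.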

Decreasing correlation with time: R&N argue that the correlation between IQ and job performance has decreased with time. While this is possible, I personally don’t trust these authors: time-trend statistics are extremely easy to p-hack through the choice of moderators and other methodological decisions.

Restriction of range: R&N argue that correcting for restriction of range after averaging the correlations is improper, and that the correction should be applied within each study before averaging. This is not correct: applying the correction within studies introduces variance between effect sizes, because each study’s restriction of range is measured imprecisely.
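A rough sketch of that argument, using the standard Thorndike Case II formula for direct range restriction. All numbers are hypothetical: three studies share the same observed correlation but have different (noisy) estimates of u, the ratio of the unrestricted to the restricted standard deviation. Real meta-analyses also weight by sample size, which this sketch ignores, and it is not the procedure of either paper.

```python
import math
from statistics import mean, pstdev

def correct_range_restriction(r, u):
    """Thorndike Case II; u = SD(unrestricted) / SD(restricted), so u >= 1."""
    return (r * u) / math.sqrt(1 + r**2 * (u**2 - 1))

# Hypothetical studies: identical observed r, noisy per-study estimates of u.
observed = [0.25, 0.25, 0.25]
u_estimates = [1.2, 1.5, 1.8]

# Order 1: correct within each study, then average.
corrected_each = [correct_range_restriction(r, u) for r, u in zip(observed, u_estimates)]
per_study_then_mean = mean(corrected_each)

# Order 2: average first, then correct once with the pooled u.
mean_then_correct = correct_range_restriction(mean(observed), mean(u_estimates))

print("spread of observed r: ", pstdev(observed))
print("spread of corrected r:", round(pstdev(corrected_each), 3))
print("correct then average: ", round(per_study_then_mean, 3))
print("average then correct: ", round(mean_then_correct, 3))
```

The observed correlations have no spread at all, yet the corrected ones do, purely because the u estimates differ. That extra between-study variance is the cost of correcting within studies when u is measured with error.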

Construct validity of IQ tests: R&N wrote that IQ tests have “poor construct validity”. Z&K counter that their construct validity is good, pointing to high levels of predictive validity (0.6 to 0.93 for general gaming ability) and noting that even tests designed to measure independent abilities still have a substantial g-factor. Construct validity refers to a test’s ability to measure what it intends to measure (intelligence), not its ability to predict other tests (predictive validity) or whether different parts of the test intercorrelate (consistency/reliability). Regardless, this is a pointless thing to argue about; what matters is the magnitude of the correlation between IQ and job performance, not the mechanism by which it arises.

Noncausality: both Z&K and R&N go on tangents arguing about the causal nature of the correlation between job performance and IQ. This should be investigated for academic purposes, but what really matters is whether selecting for IQ works.

It’s generally better to estimate the true correlation from the best studies in the literature (large samples, a wide range of jobs, corrections for reliability and restriction of range within the sample) than from a meta-analysis that uses all of the published literature.

One of these best studies was recently published:

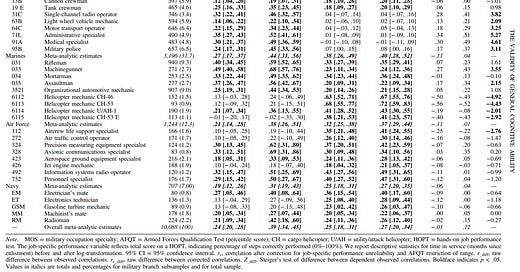

Decades of research in industrial–organizational psychology have established that measures of general cognitive ability (g) consistently and positively predict job-specific performance to a statistically and practically significant degree across jobs. But is the validity of g stable across different levels of job experience? The present study addresses this question using historical large-scale data across 31 diverse military occupations from the Joint-Service Job Performance Measurement/Enlistment Standards Project (N = 10,088). Across all jobs, results of our meta-analysis find near-zero interactions between Armed Forces Qualification Test score (a composite of math and verbal scores) and time in service when predicting job-specific performance. This finding supports the validity of g for predicting job-specific performance even with increasing job experience and provides no evidence for diminishing validity of g. We discuss the theoretical and practical implications of these findings, along with directions for personnel selection research and practice.

While they don’t report the correlation in the abstract, the correlation adjusted for restriction of range and the reliability of work samples/supervisory ratings is .39.

While this is a military-only sample, the range of jobs is extremely diverse, so generalization should not be a problem.

Besides this study, there is a similar study that uses a large (n = 4,039) military sample:

A predictor battery of cognitive ability, perceptual-psychomotor ability, temperament/personality, interest, and job outcome preference measures was administered to enlisted soldiers in nine Army jobs. These measures were summarized in terms of 24 composite scores. The relationships between the predictor composite scores and five components of job performance were analyzed. Scores from the cognitive and perceptual-psychomotor ability tests provided the best prediction of job-specific and general task proficiency, while the temperament/personality composites were the best predictors of giving extra effort, supporting peers, and exhibiting personal discipline. Composite scores derived from the interest inventory were correlated more highly with task proficiency than with demonstrating effort and peer support. In particular, vocational interests were among the best predictors of task proficiency in combat jobs. The results suggest that the Army can improve the prediction of job performance by adding non-cognitive predictors to its present battery of predictor tests.

They found a correlation of .63 between technical proficiency and cognitive ability across jobs.

I have no idea why this is so far away from the correlation of .39 that the prior study found. For what it’s worth, the correlation of .51 that Hunter and Schmidt found was probably accurate.

To measure the validity of IQ tests when predicting job performance in the real world, it would be best to uncorrect for the reliability of IQ. This would change the figures from both studies to .37 and .60 respectively, assuming a reliability of IQ of .90. Not much of a change, really.
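The arithmetic behind those two figures can be sketched as follows, assuming (as the paragraph above does) that the reported correlations include a correction for IQ reliability and that this reliability is .90. Undoing the correction means multiplying by the square root of the reliability; the function name is my own.

```python
import math

def uncorrect_for_predictor_reliability(r_corrected, predictor_reliability):
    """Reverse the disattenuation for the predictor: multiply by sqrt(reliability)."""
    return r_corrected * math.sqrt(predictor_reliability)

iq_reliability = 0.90  # assumed reliability of the IQ measure

operational = {
    r: uncorrect_for_predictor_reliability(r, iq_reliability)
    for r in (0.39, 0.63)  # the two military-sample estimates
}

for r_corrected, r_operational in operational.items():
    print(f"{r_corrected:.2f} -> {r_operational:.2f}")
# prints:
# 0.39 -> 0.37
# 0.63 -> 0.60
```

Because the square root of .90 is about .95, even a fairly unreliable test would only shave a few hundredths off these correlations.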

There is the issue of the reliability of IQ but also of the reliability of job performance. Logically, the calculated correlation understates the real one between intelligence and job performance. Another point is that high intelligence seems to have very large externalities (according to Garett Jones, the income externalities are much higher than the benefits captured by the intelligent individual himself). It seems reasonable to assume that hiring smarter people, especially in management positions, has large benefits for an organization beyond the individual measurable contribution of the employees concerned. The corollary is that affirmative action, especially for important managerial positions, has a very significant cost.