I.

Many people uncritically assume that matrix reasoning is a good test of intelligence, when in reality it plays third fiddle to tests of vocabulary and arithmetic. I’m not sure why this is, but I suspect it is because:

Linguistic invariance: no language ability is required to take them, which makes them convenient for comparisons across countries.

Subconscious association: tests of mathematical and verbal ability are not specific to psychology and are commonly found in educational institutions. Raven's-style tests, on the other hand, are only used to test IQ. This leads people to subconsciously associate IQ testing with matrix reasoning more than with other subtests.

There is no concrete evidence that either of these theories is true, but I do know that people highly value matrix reasoning as an IQ test despite the fact that it tests intelligence poorly. While matrix reasoning tests are listed in the ‘Comprehensive Online Resources’ of r/CognitiveTesting, no individual test of mathematical or verbal ability is. Matrix reasoning is also one of the most searched-for IQ tests on Wikipedia, indicating that it is highly notable.

II.

These are the 3 aspects by which an IQ test should be evaluated:

g-loading: because g is the most predictive part of the IQ test, tests that correlate more with it are better.

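Reliability: a good measurement should give consistent results, both across its own items and across repeated administrations.

Administration time: all else being equal, a test that can be completed quickly is better.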

To determine how g-loaded matrix reasoning is in comparison to other good but not comprehensive tests of IQ, I compared the g-loadings of matrix reasoning, arithmetic reasoning, and vocabulary in factor-analytic studies. For sources, I relied on Google Search, Google Scholar, some material from my old blog, and some data Emil compiled. I found that the most g-loaded subtest was vocabulary (0.75), followed by arithmetic (0.70), and then matrix reasoning (0.69). In comparison, the SAT has a g-loading of about 0.80 to 0.86 based on the available evidence.

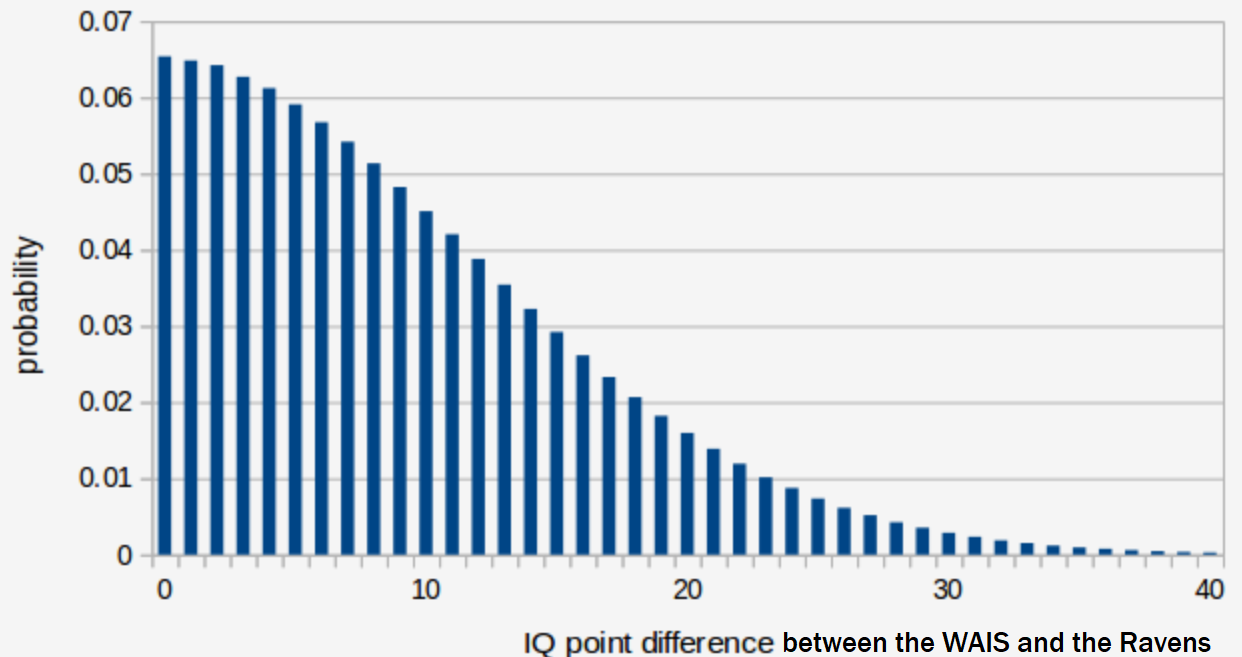
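To make the gap between these loadings more concrete, here is a minimal sketch that converts each average loading into the share of test variance attributable to g. It assumes the loading can be read as the test's correlation with g, so that the squared loading is the share of variance; the dictionary and function names are my own and purely illustrative.

```python
# Average g-loadings quoted in the text above.
g_loadings = {
    "vocabulary": 0.75,
    "arithmetic": 0.70,
    "matrix reasoning": 0.69,
}


def variance_explained_by_g(loading: float) -> float:
    """Share of a test's variance attributable to g.

    Assumption: the loading is the test's correlation with g,
    so the share of variance is simply the squared loading.
    """
    return loading ** 2


shares = {name: variance_explained_by_g(l) for name, l in g_loadings.items()}

for name, share in shares.items():
    print(f"{name}: loading {g_loadings[name]:.2f}, g share {share:.1%}")
```

Under that reading, the three subtests are separated by only a few percentage points of g variance, with matrix reasoning at the bottom of the three.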

A different meta-analysis found that the raw correlation between the WAIS and Raven's tests was 0.67.

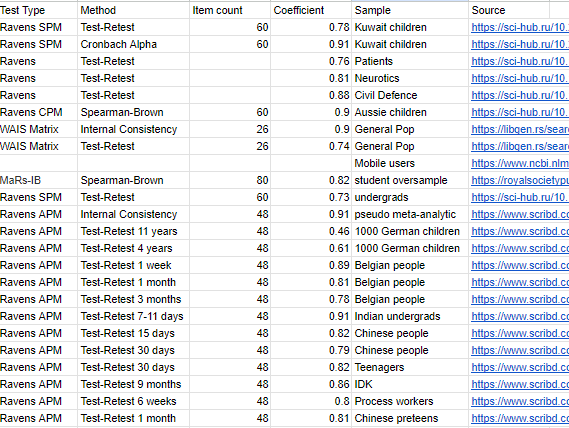

Typically, the reliability of a composite IQ test is about 0.90 to 0.95, depending on the method used to extract the reliability coefficient. I gathered the reliabilities of matrix reasoning tests in the same way. On average, the internal consistency is about 0.9, while the test-retest reliability is about 0.8, depending on the interval.

The poor test-retest reliability of matrix reasoning seems to be shared by the other nonverbal tests in the WAIS. This may be because daily or monthly fluctuations in cognitive ability affect tests that require intensive thinking more than those that rely on accumulated knowledge.
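One way to see what these reliability figures mean for an individual score is the standard error of measurement, SD × sqrt(1 − reliability). The sketch below applies that formula to the reliabilities quoted above; the IQ scale with a standard deviation of 15 is my assumption, as are the names used in the code.

```python
import math

IQ_SD = 15.0  # assumption: scores are expressed on the usual IQ scale (SD = 15)


def standard_error_of_measurement(reliability: float, sd: float = IQ_SD) -> float:
    """Classical test theory SEM: sd * sqrt(1 - reliability)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return sd * math.sqrt(1.0 - reliability)


# Reliability values quoted in the text above.
reliabilities = {
    "composite IQ (upper end)": 0.95,
    "matrix reasoning, internal consistency": 0.90,
    "matrix reasoning, test-retest": 0.80,
}

sems = {name: standard_error_of_measurement(r) for name, r in reliabilities.items()}

for name, sem in sems.items():
    print(f"{name}: SEM of about {sem:.1f} IQ points")
```

On these assumptions, moving from a reliability of 0.95 to 0.80 roughly doubles the typical error around an individual's score.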

Last, it is also important for a test to be completed quickly. Different sources claim that the Raven's SPM takes between 30 and 60 minutes to complete [1] [2] [3] [4]. In comparison, the WAIS-IV takes about 70 minutes to administer, meaning that an individual could complete about 6 WAIS subtests in the time it takes to do the whole Raven's test.
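The "about 6 subtests" figure can be roughly reproduced with simple arithmetic. This is only a sketch of one way to get there: it assumes the 70 minutes are spread evenly over the ten core WAIS-IV subtests, which the text above does not state, and the variable names are illustrative.

```python
WAIS_IV_TOTAL_MINUTES = 70      # figure quoted in the text
WAIS_IV_CORE_SUBTESTS = 10      # assumption: time is split evenly over ten core subtests
RAVENS_SPM_MINUTES = (30, 60)   # range quoted in the text

minutes_per_subtest = WAIS_IV_TOTAL_MINUTES / WAIS_IV_CORE_SUBTESTS


def subtests_in(minutes: float) -> float:
    """How many average-length WAIS-IV subtests fit into the given time."""
    return minutes / minutes_per_subtest


low, high = (subtests_in(m) for m in RAVENS_SPM_MINUTES)
midpoint = subtests_in(sum(RAVENS_SPM_MINUTES) / 2)

print(f"{minutes_per_subtest:.0f} minutes per WAIS-IV subtest")
print(f"Raven's SPM is worth {low:.1f} to {high:.1f} subtests (midpoint {midpoint:.1f})")
```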

Academic literature on the time required to complete an IQ test is surprisingly slim, and all I have managed to find is one study that uses a clinical sample with an average IQ of about 85. This study found that matrix reasoning, arithmetic reasoning, and vocabulary each take about 5-6 minutes to administer in the WAIS-IV. Personally, I don’t put much stock in it.

There is also a myth that the Raven's is a very pure measure of general intelligence. This is not true, and it has been known not to be true since about the mid-20th century, but the myth persists for unknown reasons. The test itself seems to load on visuospatial ability in addition to general intelligence, according to analysis by Gignac.

III.

In summary, I would advise against the use of Raven's-style tests to test intelligence, unless there are linguistic constraints that make their administration necessary. They are not highly g-loaded, they are relatively unreliable, and they take too long to administer.

A bigger problem perhaps is how easy it is to practice on, or teach to others. Since subjects differ in exposure to such tests, this decreases the validity, especially in the modern internet age of mass exposure. Online samples probably have even more problems.

MR is only a subtest, and no subtest used by itself will make a good IQ test. MR does what it's supposed to do: it has good g-loading (I think I've also seen that fluid reasoning is the only factor that retains its g-loading as you go higher and higher) and good enough reliability. That's all you need to put it in the battery, since you can only adequately measure individual IQ with a large number of questions and several subtests. Using MR alone gives only a rough estimate, and not a very good one, but the same will be true for any other subtest.
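The point about batteries can be illustrated with a small calculation. The sketch below is a simplification and not a model of any real battery: it assumes every subtest is standardized, loads equally on g, shares nothing with the other subtests apart from g, and is summed with unit weights. The function name and the loading of 0.7 are chosen only for illustration.

```python
import math


def composite_g_loading(loading: float, n_subtests: int) -> float:
    """g-loading of a unit-weighted sum of n standardized subtests.

    Assumptions: each subtest loads `loading` on g, and subtests correlate
    with each other only through g (so each pair correlates at loading ** 2).
    """
    if n_subtests < 1:
        raise ValueError("need at least one subtest")
    covariance_with_g = n_subtests * loading
    composite_variance = n_subtests + n_subtests * (n_subtests - 1) * loading ** 2
    return covariance_with_g / math.sqrt(composite_variance)


for n in (1, 2, 4, 10):
    print(f"{n:>2} subtests: composite g-loading of about {composite_g_loading(0.7, n):.2f}")
```

Under these idealized assumptions, a single subtest with a loading of about 0.7 stays at 0.7, while a sum of ten such subtests is well above 0.9. Real batteries will fall short of this because subtests also share group factors.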