Optimized anime rankings

Probably not Monster

Normally, people judge media by a rating out of 10, which is intended as a proxy for the value they ascribe to the work. Some websites aggregate the ratings of many individuals and rank anime accordingly, such as MyAnimeList (MAL):

There are several reasons why these rankings may not be reliable indicators of how much “value” a work holds for the general public:

Sequel bias: most people who watch sequels were already fans of the original work, so sequels’ scores are inflated; note that 3 of the top 5 on MAL are sequels. Despite that, FMA:B and Steins;Gate have somehow survived the sequel menace and claimed their places as the top anime.

Rater bias: people who give systematically higher ratings may watch certain anime more than others. For example, more experienced viewers may give lower ratings across the board; since popular anime draw a larger share of less experienced (and more generous) viewers, their ratings are inflated.

Unrecognized value: some people may not be good at recognizing the value of a work, because doing so requires a degree of attention or cognitive ability that some members of the audience lack.

Signalling: raters may consider that promoting certain anime could change their social status, and so overrate “high status” anime.

Bad mapping: most people rate titles with a mean of about 7 and a standard deviation of about 1.5-2. Subjectively speaking, a ‘10’ is much further above a ‘7’ in value than a ‘4’ is below it. Think of it this way: a world with 100 anime that are ‘10’s and 100 that are ‘4’s is much better than a world with 200 ‘7’s.
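To make the two-worlds comparison concrete, here is a minimal sketch assuming a hypothetical convex value function in which each extra point on the 10-point scale doubles subjective value. The base of 2 is an arbitrary illustrative choice, not something the ranking uses. Both worlds have the same mean rating, but under any strictly convex mapping the polarized world is worth more (Jensen's inequality).

```python
from typing import List


def value(score: float, base: float = 2.0) -> float:
    """Hypothetical convex mapping from a rating to subjective value.
    Each point up the scale multiplies value by `base`; a '7' is worth 1.0."""
    return base ** (score - 7)


def world_value(scores: List[float]) -> float:
    """Total subjective value of a world containing these ratings."""
    return sum(value(s) for s in scores)


polarized = [10] * 100 + [4] * 100
uniform = [7] * 200

mean_polarized = sum(polarized) / len(polarized)
mean_uniform = sum(uniform) / len(uniform)
print(mean_polarized, mean_uniform)                   # 7.0 7.0
print(world_value(polarized), world_value(uniform))   # 812.5 200.0
```

Averaging raw 1-10 scores implicitly assumes the mapping is linear, which is exactly the assumption the bad-mapping argument rejects.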

To correct for some of these biases, I scraped the ratings of 20 individuals who rate anime on MyAnimeList, as well as each anime’s mean rating on MyAnimeList. These individuals are mostly well-known anime reviewers; the full list is in the Appendix. This is meant to correct some of the bias caused by people being too stupid to recognize value, as the average IQ of an anime reviewer is (probably) somewhat higher than that of the average viewer. While some of these reviewers no doubt have low IQs, they are still probably better than the general public.

To correct for rater bias, I determined whether some raters give systematically higher or lower ratings than others using a mixed-effects meta-analysis that adjusted for the mean rating each rater gave. Each rater’s ratings were also normalised to avoid biases arising from differences in variance. In addition, anime with fewer than 10 ratings were excluded, as their averages are not reliable enough; this gives the average rating an omega reliability of .93. This could exclude underwatched but great series, but, for what it’s worth, lowering the cutoff to 5 ratings only adds a few sequels to the top 10.
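The post’s actual rater adjustment is a mixed-effects model. As a simpler stand-in, here is a minimal sketch of per-rater standardization (z-scoring) followed by the minimum-ratings cutoff. The `ratings[rater][anime]` layout and the function names are hypothetical, and z-scoring only removes each rater’s mean and spread, not everything a mixed model can capture.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical layout: ratings[rater][anime] = raw score out of 10.
Ratings = Dict[str, Dict[str, float]]


def standardize_per_rater(ratings: Ratings) -> Ratings:
    """Z-score each rater's scores so harsh vs. lenient raters, and raters
    with narrow vs. wide spreads, end up on a common scale."""
    out: Ratings = {}
    for rater, scores in ratings.items():
        vals = list(scores.values())
        mu, sd = mean(vals), pstdev(vals)
        out[rater] = {a: (s - mu) / sd if sd > 0 else 0.0 for a, s in scores.items()}
    return out


def anime_means(z: Ratings, min_ratings: int = 10) -> Dict[str, float]:
    """Mean standardized score per anime, dropping anime rated by fewer
    than `min_ratings` raters."""
    by_anime: Dict[str, List[float]] = defaultdict(list)
    for scores in z.values():
        for anime, s in scores.items():
            by_anime[anime].append(s)
    return {a: mean(v) for a, v in by_anime.items() if len(v) >= min_ratings}


# Toy example: a harsh and a lenient rater who agree on the ordering.
ratings = {
    "harsh": {"A": 6, "B": 4},
    "lenient": {"A": 10, "B": 8},
}
z = standardize_per_rater(ratings)
print(anime_means(z, min_ratings=2))  # {'A': 1.0, 'B': -1.0}
```

After standardization the harsh and lenient raters become indistinguishable, which is the point: only their relative judgments survive.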

Adjusting for the mapping bias is difficult. I opted to average the percentage of raters who gave an anime a 10 with its mean rating, yielding a somewhat more accurate ranking I call the ‘Greatness Index’. Both metrics were scaled first so that neither dominates the other.
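The post doesn’t give the exact formula, so this is a sketch under two assumptions: “scaled” means z-scored across titles, and the two standardized metrics get equal weight. All names are hypothetical. The toy example has two titles with the same mean rating, one of which earns 10s.

```python
from statistics import mean, pstdev
from typing import Dict, List


def zscores(xs: List[float]) -> List[float]:
    """Standardize a list; a constant list maps to all zeros."""
    mu, sd = mean(xs), pstdev(xs)
    return [(x - mu) / sd if sd > 0 else 0.0 for x in xs]


def greatness_index(ratings_by_anime: Dict[str, List[float]]) -> Dict[str, float]:
    """Equal-weight average of two standardized per-title metrics:
    the mean rating and the share of ratings that are a 10."""
    titles = list(ratings_by_anime)
    means = [mean(ratings_by_anime[t]) for t in titles]
    share_10 = [sum(r == 10 for r in ratings_by_anime[t]) / len(ratings_by_anime[t])
                for t in titles]
    return {t: (m + p) / 2 for t, m, p in zip(titles, zscores(means), zscores(share_10))}


gi = greatness_index({
    "consistent": [8, 8, 8, 8],
    "polarizing": [10, 10, 6, 6],
    "weak": [6, 6, 6, 6],
})
print(sorted(gi, key=gi.get, reverse=True))  # ['polarizing', 'consistent', 'weak']
```

Standardizing before averaging matters because the share of 10s lives on a 0-1 scale while mean ratings live on a 1-10 scale; without it one metric would swamp the other.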

There were also some issues with outliers, so I removed ratings more than 3 SD away from the mean rating for that anime (details in the Appendix).

According to this method, the top 20 series are:

Monster

Fullmetal Alchemist: Brotherhood

Cowboy Bebop

Hunter x Hunter (2011)

Legend of the Galactic Heroes

Steins;Gate

Death Note

Gankutsuou

Mushishi

Fate/Zero

Neon Genesis Evangelion

Tengen Toppa Gurren Lagann

Houseki no Kuni

Mahou Shoujo Madoka★Magica

Ghost in the Shell: Stand Alone Complex

Cardcaptor Sakura

Violet Evergarden

Shouwa Genroku Rakugo Shinjuu

Baccano!

Tatami Galaxy

The top 10 movies are:

Perfect Blue

Princess Mononoke

A Silent Voice

Spirited Away

Totoro

Kara no Kyoukai Movie 5: Mujun Rasen

K-On! Movie

Kara no Kyoukai Movie 7: Satsujin Kousatsu (Go)

Kimi no Na wa

Cowboy Bebop: Tengoku no Tobira

The top 5 sequels are:

Fate/Zero: 2nd season

The End of Evangelion (this is a sequel, fight me)

Clannad: After Story

Aria the Origination

Ghost in the Shell: Stand Alone Complex 2nd GIG

Unfortunately, given that the reliability of the ranking metric is 0.93 and that 307 anime could potentially have made the top 10, the exact top 10 should not be taken as very accurate, and most of the current top 10 will probably drop into the 10-20 range once I do the next revision with 69 judges. Because of that, I do not expect Monster to occupy the “true” #1 slot.
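To see why a reliability of .93 still leaves the exact top 10 shaky, here is a small Monte Carlo sketch. It assumes (a simplification of mine, not the post’s model) that the 307 eligible titles have normally distributed true quality and that the observed score adds independent noise sized to give reliability .93. It reports how many of the observed top 10 are also in the true top 10, and how often the observed #1 is the true #1.

```python
import random
from typing import Tuple


def top_k_stability(n_titles: int = 307, reliability: float = 0.93, k: int = 10,
                    trials: int = 1000, seed: int = 0) -> Tuple[float, float]:
    """Returns (average number of the observed top k that are also in the
    true top k, share of trials where the observed #1 is the true #1)."""
    rng = random.Random(seed)
    # With true-score variance 1, reliability = 1 / (1 + error variance),
    # so the error variance is (1 - reliability) / reliability.
    noise_sd = ((1 - reliability) / reliability) ** 0.5
    overlap_total, same_top = 0, 0
    for _ in range(trials):
        true = [rng.gauss(0, 1) for _ in range(n_titles)]
        observed = [t + rng.gauss(0, noise_sd) for t in true]
        true_rank = sorted(range(n_titles), key=lambda i: true[i], reverse=True)
        obs_rank = sorted(range(n_titles), key=lambda i: observed[i], reverse=True)
        overlap_total += len(set(true_rank[:k]) & set(obs_rank[:k]))
        same_top += true_rank[0] == obs_rank[0]
    return overlap_total / trials, same_top / trials


print(top_k_stability())
```

At the top of a long list, titles are packed closely together in true quality, so even modest measurement error is enough to reshuffle the order.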

Foresighted FAQ

Q: Why is reviewer X, whom I don’t respect, included in the rankings?

A: The ratings of all the raters I included load substantially on one factor, indicating that they measure the same underlying variable. As long as the errors of bad reviewers don’t covary with each other, their quirks largely cancel out, and the aggregate ratings still measure roughly the same latent variable.
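A toy simulation of this argument with made-up numbers: 300 titles, 15 decent raters and 5 noisy “bad” ones, all with independent errors. No single rater tracks the latent quality especially well, but the average does, because independent errors largely cancel. Errors shared by several reviewers would not cancel this way, which is why the covariance condition matters.

```python
import random
from statistics import mean
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Plain Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(1)
latent = [rng.gauss(0, 1) for _ in range(300)]  # hypothetical true quality of 300 titles

# Hypothetical panel: 15 decent raters (error SD 1) and 5 bad ones (error SD 2).
# Every error is drawn independently, so no two raters' errors covary.
error_sds = [1.0] * 15 + [2.0] * 5
raters = [[q + rng.gauss(0, sd) for q in latent] for sd in error_sds]
aggregate = [mean(scores) for scores in zip(*raters)]

worst_single = min(pearson(r, latent) for r in raters)
best_single = max(pearson(r, latent) for r in raters)
print(f"worst rater r={worst_single:.2f}, best rater r={best_single:.2f}, "
      f"aggregate r={pearson(aggregate, latent):.2f}")
```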

Q: How well do these rankings correspond to other attempts at something similar?

A: I know of one guy who compiled a top 100 list of the most acclaimed anime from lists he found on the internet. As far as series go, my #1 (Monster) is his #27, my #2 is his #8, my #3 is his #2, my #4 is his #21, and my #5 is his #15. The agreement isn’t great, but it’s not terrible.

It’s rather disingenuous to do this, since I used the MAL ratings to calculate my own, but my #1 (Monster) is #25 on MAL, my #2 is #1, my #3 is #44, my #4 is #8, and my #5 is #12. However, MAL’s list is full of sequels, which are heavily overrated because mostly only fans rate them.

Apparently there is also a Russian MyAnimeList clone; here is where my top 5 rank on it:

Monster: 52

Fullmetal Alchemist: Brotherhood: 3

Cowboy Bebop: 19

Hunter x Hunter: 37

Legend of the Galactic Heroes: 67

Q: How do ratings of individual anime covary?

Appendix

Here is the list of reviewers that I chose:

ThatAnimeSnob: has a bad reputation for… several reasons, but is a reasonably reliable reviewer, and few people have put the effort into reviewing as many anime as he has.

TheAnimeMan: some Aussie YouTube content creator. Average reviewer.

Gigguk: some Thai/British YouTube content creator. Average reviewer.

Super Eyepatch Wolf: cringe name, but not a terrible reviewer.

Aleczandxr: some rando youtube reviewer

Craftsdwarf: some rando yt reviewer

KevinNyaa: another rando yt reviewer

Scandalous21: that’s me! It’s kind of shady to include yourself in your own list of reviewers, but for what it’s worth, I am not skewing the ratings very much: there are ~20 reviewers in total, and I have only watched ~100 anime, compared with ~1000 or even ~3000 for many of the others.

ChibiReviews: garbo rando yt reviewer

SeaTactics: rando yt reviewer

OtakuDaikun: rando yt guy

Gwern: yes, that gwern. He reviews anime.

Zeria_: some other yt rando

Archaeon: from MAL’s list of most helpful reviewers. His ratings are decent, except that he gave Evangelion a 5 (lol)

RebelPanda: another MAL reviewer

Stark007: another MAL reviewer. too agreeable for my tastes.

Veronin: another MAL reviewer.

TheLlama: another MAL reviewer. too agreeable, but has some good contrarian takes.

ZephSilver: another MAL reviewer. too disagreeable, but has some good contrarian takes.

Skadi: another MAL reviewer.

MAL rating: the average score the general public gives the anime on MAL

Most of them were taken from reddit recommendations or from the anime reviewers who were rated as the most helpful on MAL, which is a reasonable proxy for quality/number of anime seen.

Others that were considered but not entered:

https://myanimelist.net/profile/G-0ff incomplete

https://myanimelist.net/profile/digibro profile is not serious

https://myanimelist.net/profile/BadRespawn too few anime seen

https://myanimelist.net/profile/DEMOLITION_D doesn’t rate

https://myanimelist.net/profile/PauseandSelect doesn’t rate

https://myanimelist.net/profile/Crabe not sure if it’s the real one

https://myanimelist.net/profile/Karhu doesn’t rate

https://anime.plus/Arkada/ratings,anime doesn’t rate enough

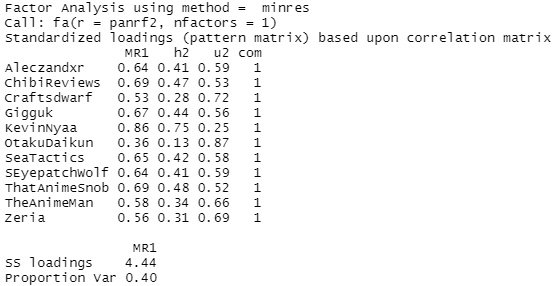

Here are the results of a factor analysis of the ratings of each anime, where the anime are rows and the reviewers are columns:

Because the proportion of variance explained by ‘rating g’ is so high, the reliability of the overall ranking among titles with at least 10 ratings is very high.
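For readers unfamiliar with omega: in a one-factor model with standardized loadings λ, McDonald’s omega for the sum (or mean) of the raters is (Σλ)² / ((Σλ)² + Σ(1 - λ²)). Below is a minimal sketch of that formula. The loadings are made-up values, not the post’s estimates, and the sketch ignores the fact that each anime is rated by only a subset of the reviewers. It shows why requiring more ratings per title raises reliability even when each rater loads only moderately.

```python
from typing import List


def mcdonald_omega(loadings: List[float]) -> float:
    """Omega for a one-factor model with standardized loadings, assuming
    uncorrelated errors with variance 1 - loading**2."""
    common = sum(loadings) ** 2
    unique = sum(1 - lam ** 2 for lam in loadings)
    return common / (common + unique)


# Made-up loadings: ten raters at 0.6 vs. twenty raters at 0.6.
print(round(mcdonald_omega([0.6] * 10), 3), round(mcdonald_omega([0.6] * 20), 3))  # 0.849 0.918
```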

Gwern, Craftsdwarf, and I gave the ratings least consistent with the rest of the crowd, while the aggregated MAL rating was the most consistent with it. I also ran a second analysis excluding the aggregated MAL rating:

The results were mostly the same. From what I can see, reviewers who are more distant from the broader anime community (e.g. me, Gwern) tend to give ratings that are much more distant from the rest of the community’s. This is probably because ratings within the community are not independent: some people’s reviews may shape the community’s perception of an anime as a whole. I also computed the correlation matrix of all the ratings:

All of the correlations are positive except one: Gwern’s and Zeria’s ratings correlate at -0.087, partly because Gwern gave Death Note a 10 and Zeria gave it a 2 (???). It’s a little difficult to see, but from what I can tell, reviewers from the MAL community give suspiciously similar ratings. To test this hypothesis, I took the MAL reviewers as a subset and factor-analyzed them separately.

The general factor of ratings now explains much more variance, rising from 43% to 54%. I did the same with the YouTube reviewers:

Here it went from 43% to 40%, not a big difference, probably because many of these YouTube reviewers have small channels and don’t know each other. The MAL reviewers, on the other hand, were deliberately chosen as the best-known ones, and they might even know each other in real life!
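The post doesn’t say which software or extraction method produced these percentages, so here is a dependency-free sketch of the two computations involved, under stated assumptions. Each correlation uses only the anime both reviewers rated (pairwise deletion, my assumption about how missing ratings were handled). The “share of variance explained” is approximated by the first principal component (largest eigenvalue divided by the number of reviewers) rather than a fitted factor model. Function names are hypothetical. The toy example shows how a single 10-vs-2 disagreement, like the Death Note case above, can pull a small-overlap correlation below zero.

```python
from statistics import mean
from typing import Dict, List, Optional

Scores = Dict[str, float]  # anime title -> score, for one reviewer


def pairwise_r(a: Scores, b: Scores) -> Optional[float]:
    """Pearson r over the anime both reviewers rated (pairwise deletion)."""
    shared = sorted(a.keys() & b.keys())
    if len(shared) < 3:
        return None
    x = [a[t] for t in shared]
    y = [b[t] for t in shared]
    mx, my = mean(x), mean(y)
    sxy = sum((p - mx) * (q - my) for p, q in zip(x, y))
    sxx = sum((p - mx) ** 2 for p in x)
    syy = sum((q - my) ** 2 for q in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / (sxx * syy) ** 0.5


def correlation_matrix(reviewers: List[Scores]) -> List[List[float]]:
    """Reviewer-by-reviewer correlations; unusable pairs are set to 0 (an assumption)."""
    k = len(reviewers)
    return [[1.0 if i == j else (pairwise_r(reviewers[i], reviewers[j]) or 0.0)
             for j in range(k)] for i in range(k)]


def first_component_share(corr: List[List[float]], iters: int = 500) -> float:
    """Largest eigenvalue (via power iteration) divided by the number of
    reviewers: the share of total variance on the first principal component."""
    k = len(corr)
    v = [1.0] * k  # all-ones start suits mostly positive correlation matrices
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    lam = sum(v[i] * corr[i][j] * v[j] for i in range(k) for j in range(k))
    return lam / k


# Made-up scores (apart from the 10 vs. 2 on Death Note).
a = {"A": 8, "B": 7, "C": 9, "Death Note": 10}
b = {"A": 8, "B": 7, "C": 9, "Death Note": 2}
print(pairwise_r(a, b))  # negative, despite perfect agreement on A, B and C
```

With a matrix where every off-diagonal correlation is 0.5, four reviewers give a first-component share of (1 + 3 × 0.5) / 4 = 0.625.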

I was also curious whether there was a multidimensional rating structure; to facilitate this analysis, I removed all anime with fewer than 15 ratings. Parallel analysis suggested 9 factors or 3 components, and 3 components sounds much more plausible to me.

Most reviewers do not load highly on the 2nd or 3rd components. Notably, component 2 largely contrasts agreeing with Gwern against agreeing with Zeria, and component 3 contrasts agreeing with Aleczandxr against agreeing with TheAnimeMan.

I was also curious which anime were most viewed within the set of reviewers. Some notable ones definitely punch above their weight in this sample: 5 Centimeters per Second, The Garden of Sinners, Anohana, and Welcome to the NHK.

About the outlier exclusions: I first noticed the outliers were a problem when computing the variance of ratings for each anime. Death Note came out as one of the most controversial anime, which was rather odd, as most people seem to agree it’s good. It turned out this was driven largely by one reviewer who gave it a 2/10, which dragged the mean rating down and the standard deviation up.

To prevent this, I removed every rating that differed from the anime’s standardized mean by more than 3 standardized units. By analogy: if a group of people judge someone’s IQ to be 145 and one particular person judges it to be 99, that judgment is more than 3 SD (45 IQ points) away, so it would be removed and the mean and variance recomputed without it.
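A minimal sketch of this exclusion rule. It assumes ratings have already been standardized per rater and that each rating is compared with the mean of the other ratings for the same anime. That leave-one-out choice is mine: the post doesn’t say whether the outlier itself was included in the mean, and including it pulls the mean toward the outlier. The example replays the IQ analogy on a z-score scale (mean 100, SD 15).

```python
from typing import Dict

Ratings = Dict[str, Dict[str, float]]  # ratings[rater][anime] = standardized score


def drop_outliers(z: Ratings, cutoff: float = 3.0) -> Ratings:
    """Drop any standardized rating more than `cutoff` units away from the
    mean of the other raters' standardized ratings for the same anime."""
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for scores in z.values():
        for anime, s in scores.items():
            sums[anime] = sums.get(anime, 0.0) + s
            counts[anime] = counts.get(anime, 0) + 1
    kept: Ratings = {}
    for rater, scores in z.items():
        kept[rater] = {}
        for anime, s in scores.items():
            n = counts[anime]
            if n < 2:  # nobody else rated it, so there is nothing to compare against
                kept[rater][anime] = s
                continue
            others_mean = (sums[anime] - s) / (n - 1)  # leave-one-out mean
            if abs(s - others_mean) <= cutoff:
                kept[rater][anime] = s
    return kept


# Five judges say 145 (z = 3.0); one dissenter says 99 (z ≈ -0.07).
judgments = {f"judge{i}": {"X": (145 - 100) / 15} for i in range(5)}
judgments["dissenter"] = {"X": (99 - 100) / 15}
cleaned = drop_outliers(judgments)
print([r for r, scores in cleaned.items() if "X" in scores])
# ['judge0', 'judge1', 'judge2', 'judge3', 'judge4']
```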

Having watched many of the top-rated anime in this list, it's a bit hard to take seriously, especially given how low Evangelion's position is.

This is great; over a decade ago I did something similar with the books in the Dresden Files, but my sample wasn't very good.

There's got to be interesting psychological information to be had from this kind of process. I would have guessed that a person's loading on the first factor of any list of paintings/movies/anime/whatever is reasonably indicative of their IQ, since smarter people are more accurate about things in general. But then Gwern has a fairly low score there, so IDK.