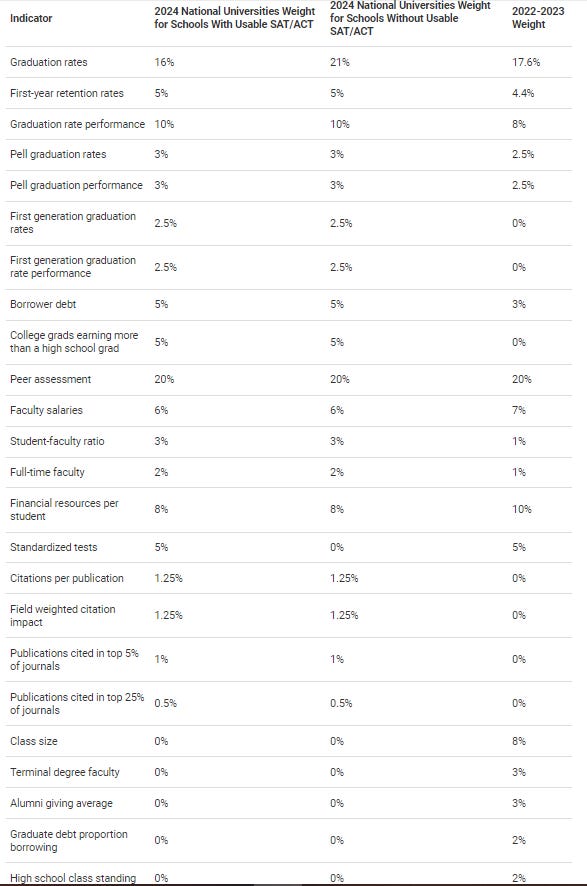

Many people have attempted to create university rankings, the most notable being the US News ranking, which is updated every year. Its methodology is better than I expected, but the weights are a bit wonky.

Ideally, you would weight every variable by how well it measures ‘latent university quality’, though it’s not even clear that such a thing exists. Fortunately, factor analysis can answer both questions: whether a common factor exists, and how strongly each variable loads on it.
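To make the weighting idea concrete, here’s a minimal sketch of one-factor principal axis factoring in NumPy. It isn’t necessarily the estimation method behind the results below; the function names are my own, and the simulated data (four indicators with known, made-up loadings) stand in for the real university variables. Using each loading directly as a weight is just one simple choice; regression-based factor scores would weight things somewhat differently.

```python
import numpy as np

def principal_axis_loadings(data, n_iter=100, tol=1e-6):
    """One-factor principal axis factoring on an (n_samples, n_vars) array.

    Returns one loading per variable; a variable that tracks the common
    factor closely gets a loading near 1.
    """
    corr = np.corrcoef(data, rowvar=False)
    # Starting communalities: squared multiple correlation of each variable with the rest.
    communalities = 1.0 - 1.0 / np.diag(np.linalg.inv(corr))
    for _ in range(n_iter):
        reduced = corr.copy()
        np.fill_diagonal(reduced, communalities)  # keep only shared variance on the diagonal
        eigvals, eigvecs = np.linalg.eigh(reduced)  # eigenvalues in ascending order
        loadings = eigvecs[:, -1] * np.sqrt(max(eigvals[-1], 0.0))
        new_communalities = loadings ** 2
        converged = np.max(np.abs(new_communalities - communalities)) < tol
        communalities = new_communalities
        if converged:
            break
    # An eigenvector's sign is arbitrary; orient it so the loadings are mostly positive.
    if loadings.sum() < 0:
        loadings = -loadings
    return loadings

def factor_weights(loadings):
    """Normalize loadings so they can be used as weights that sum to 1."""
    return loadings / loadings.sum()

# Simulated example: one latent factor, four indicators with known loadings.
rng = np.random.default_rng(0)
n = 5000
true_loadings = np.array([0.9, 0.8, 0.7, 0.6])
factor = rng.standard_normal(n)
noise = rng.standard_normal((n, 4)) * np.sqrt(1 - true_loadings ** 2)
data = factor[:, None] * true_loadings + noise

estimated = principal_axis_loadings(data)
print(np.round(estimated, 2))
print(np.round(factor_weights(estimated), 3))
```

With enough rows, the estimated loadings land close to the ones used to generate the data, which is the property that makes them usable as weights.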

The variables I used as measurements of university quality include:

Student Quality: composite of SAT, ACT, and class rank of students

Graduation Rate: composite of graduation rates at 4, 5, and 6 years (note: this correlates with student quality at .87)

Academic Quality: composite of each university’s citation count, article count, and h-index, controlled for graduate student enrollment.

Student-Faculty Ratio: self-explanatory

% of faculty that have PhDs: self-explanatory

% of alumni who donated: self-explanatory

Acceptance Rate: admissions total divided by applicants total
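Here’s a rough sketch of how composites like these can be built: z-score each component and average them, and remove the linear effect of graduate enrollment with an OLS residual. I haven’t specified the exact procedure above, so treat the unit weighting and the residualizing step as assumptions, and the numbers as hypothetical placeholders for four schools.

```python
import numpy as np

def zscore(x):
    """Standardize to mean 0, SD 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()

def composite(*columns):
    """Unit-weighted composite: the mean of the z-scored input columns.
    Flip the sign of any column where lower is better before passing it in."""
    return np.mean([zscore(c) for c in columns], axis=0)

def residualize(y, x):
    """OLS residuals of y on x (with an intercept): the part of y not
    linearly explained by x, e.g. citations controlled for grad enrollment."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef

# Hypothetical numbers for four schools, for illustration only.
sat = [1200, 1300, 1400, 1500]
act = [25, 28, 31, 34]
top10_pct = [30, 55, 70, 90]  # share of students in the top 10% of their class
student_quality = composite(sat, act, top10_pct)

citations = [5_000, 12_000, 20_000, 40_000]
grad_enrollment = [1_000, 2_500, 4_000, 9_000]
citations_adj = residualize(citations, grad_enrollment)

# Acceptance rate: admissions total divided by applicants total.
admitted = [8_000, 6_000, 4_000, 2_000]
applicants = [10_000, 15_000, 20_000, 40_000]
acceptance_rate = np.array(admitted) / np.array(applicants)

print(np.round(student_quality, 2))
print(np.round(citations_adj, 1))
print(acceptance_rate)
```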

Here are the results of the factor analysis:

Most of the variables have high loadings, but student quality stands out with a loading of .98, very close to 1. This indicates that whatever causes all of these variables to covary is almost identical to an index of overall ‘student talent’. This could happen for several reasons: better students are more likely to graduate, and if those students are later employed by the university, they are more likely to run it well. For what it’s worth, some universities are better than others for reasons unrelated to how talented their students are: some may have better facilities, better policies, more efficient budgeting, and better food, though these things are hard to measure (and are not included in university ranking methodologies).
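As a quick worked check, assuming standardized variables and a single common factor, a variable’s communality (the share of its variance explained by the factor) is its squared loading:

$$
h^2 = \lambda^2 = 0.98^2 \approx 0.96, \qquad u^2 = 1 - h^2 \approx 0.04
$$

So only about 4% of the variance in student quality is left for things the common factor doesn’t capture, measurement error included.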

These advantages can then attract more applicants, which means the university gets more students or better students. If that happens, the university’s prestige rises. It’s largely a self-perpetuating cycle.

One caveat: a measurement does not have to reflect a latent variable to be useful. If we generated 100 restaurants with randomly assigned chefs, facilities, and menus, some restaurants would be better than others, but the different measures of restaurant quality would be uncorrelated with one another.
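A quick simulation of the restaurant thought experiment, assuming (hypothetically) that overall quality is just the sum of three independent 0 to 10 scores. The three measures barely correlate with each other, yet each one still tracks overall quality, at roughly 1/√3 ≈ .58 in expectation.

```python
import numpy as np

rng = np.random.default_rng(42)
n_restaurants = 100

# Three independently assigned ingredients of quality (hypothetical 0-10 scores).
chef = rng.uniform(0, 10, n_restaurants)
facilities = rng.uniform(0, 10, n_restaurants)
menu = rng.uniform(0, 10, n_restaurants)

# Overall quality is simply the sum; no common cause links the three parts.
quality = chef + facilities + menu

measures = np.column_stack([chef, facilities, menu])
inter_corr = np.corrcoef(measures, rowvar=False)  # measures vs. each other
validity = [np.corrcoef(m, quality)[0, 1] for m in measures.T]  # each measure vs. quality

print(np.round(inter_corr, 2))
print(np.round(validity, 2))
```

A factor analysis of these three measures would find no strong general factor, even though each one is a perfectly valid piece of restaurant quality.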

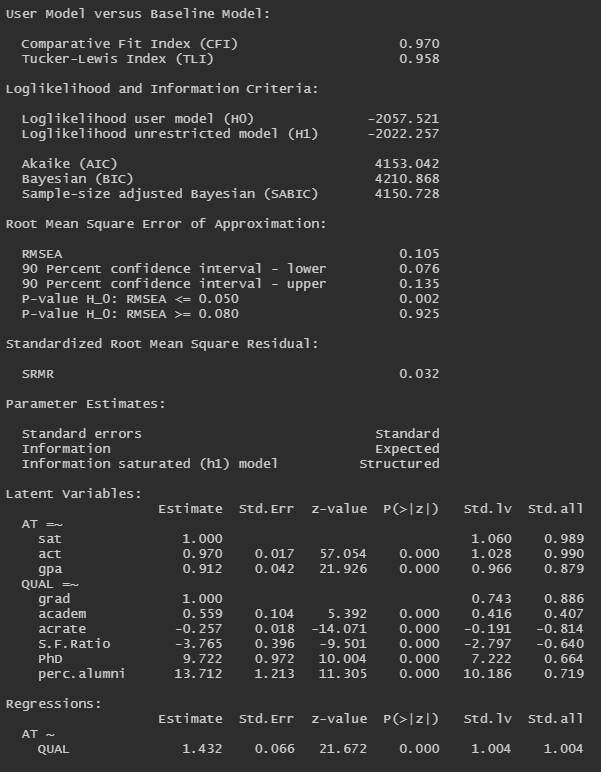

Other methods of estimating the relationship between ‘university quality’ and ‘student quality’ also suggest that the true correlation is very high: somewhere between .91 and 1. Using an SEM to test the latent relationship suggests that the general factor of student academic quality and university quality are one and the same, regardless of the estimation method:
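An SEM estimates the latent correlation directly, but a simpler related idea shows why an observed correlation like .91 can imply a latent one near 1: Spearman’s correction for attenuation divides the observed correlation by the square root of the two measures’ reliabilities. This is an illustration, not the calculation used here, and the reliabilities below are hypothetical.

```python
import math

def disattenuate(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation.

    Estimates the correlation between the latent variables behind two
    measures from their observed correlation and their reliabilities.
    The result is not clipped, so sampling error can push it above 1.
    """
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)

# Hypothetical reliabilities, chosen only for illustration.
print(round(disattenuate(0.91, rel_x=0.95, rel_y=0.90), 3))  # 0.984
```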

Unfortunately, the fit isn’t particularly good for either model (many indicators have loadings above 2 or below -2), so I tried a different approach to estimating the correlation between ‘university quality’ and student quality: I calculated university quality without the student quality metrics, then correlated that score with student quality. Even with the student quality metrics excluded, the two correlate at .91.
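Here’s a minimal sketch of that leave-out check on simulated data. The unit-weighted composite (mean of z-scores) is an assumption, since the exact scoring isn’t spelled out above, and the loadings are made up; with these particular loadings the correlation should come out near .87, purely as a property of the simulation.

```python
import numpy as np

def zscore(x):
    """Standardize to mean 0, SD 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()

def quality_without(indicators, exclude):
    """Unit-weighted university-quality score (mean of z-scores), leaving out
    every indicator named in `exclude`. Indicators where lower is better
    (student-faculty ratio, acceptance rate) should be sign-flipped first."""
    kept = [zscore(values) for name, values in indicators.items() if name not in exclude]
    return np.mean(kept, axis=0)

# Simulated data: one latent factor drives every indicator (hypothetical loadings).
rng = np.random.default_rng(1)
n = 2000
latent = rng.standard_normal(n)
loadings = {
    "student_quality": 0.98,
    "graduation_rate": 0.8,
    "academic_quality": 0.7,
    "pct_faculty_phd": 0.7,
    "alumni_giving": 0.6,
}
indicators = {
    name: lam * latent + np.sqrt(1 - lam ** 2) * rng.standard_normal(n)
    for name, lam in loadings.items()
}

# Score university quality without the student-quality indicator, then correlate.
uni_quality = quality_without(indicators, exclude={"student_quality"})
r = np.corrcoef(uni_quality, indicators["student_quality"])[0, 1]
print(round(r, 2))
```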

I also plotted my student quality ratings against the US News ranks:

Datasets:

The first thing I notice is that engineering and technology universities seem to be ranked particularly low relative to their student quality (including Colorado School of Mines, what a name!)
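One simple way to flag schools like these is to rank them by student quality and compare that with their published rank; a positive gap means the school is ranked worse than its students would suggest. This is a sketch, not how the plot was made: it assumes both ranks cover the same set of schools, ignores ties, and uses hypothetical school names and numbers.

```python
def rank_gaps(schools, student_quality, published_rank):
    """Return (school, gap) pairs sorted from most under-ranked to most
    over-ranked, where gap = published rank - student-quality rank."""
    # Student-quality rank: 1 = highest student quality.
    order = sorted(range(len(schools)), key=lambda i: student_quality[i], reverse=True)
    sq_rank = [0] * len(schools)
    for position, i in enumerate(order, start=1):
        sq_rank[i] = position
    gaps = [(schools[i], published_rank[i] - sq_rank[i]) for i in range(len(schools))]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)

# Hypothetical data for illustration only.
schools = ["School A", "School B", "School C", "School D"]
student_quality = [1.5, 1.2, 0.4, -0.3]
published_rank = [1, 4, 2, 3]

for school, gap in rank_gaps(schools, student_quality, published_rank):
    print(school, gap)
```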