Who Believes AI Will Destroy Humanity?

Young, unhappy, left wing, and thin people.

The new Slate Star Codex survey is out, and it asked respondents for the probability that AI will destroy humanity. The mean response was 14%, with a median of 5%. Overall, a fairly reasonable distribution of responses, with a few crazies here and there. Code here.

I used grouping functions and correlation coefficients to determine the zero-order associations between variables and perceived AI risk. AIRISK here is measured as the % chance the respondent gives that AI will destroy humanity by 2100. p-values were assessed with the Kruskal-Wallis test.
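For readers who want to try this kind of zero-order check themselves, here is a minimal sketch of what it could look like. It is not the linked code: the column names `Age` and `RelationshipStyle`, the helper names, and every number in the toy table are made up for illustration, and I am assuming pandas and SciPy.

```python
import pandas as pd
from scipy import stats

# Toy data invented purely for illustration; these are NOT survey responses.
# Column names other than AIRISK are hypothetical.
df = pd.DataFrame({
    "AIRISK": [1, 5, 10, 50, 2, 20, 5, 80, 0.5, 15, 30, 3],
    "Age": [45, 30, 28, 22, 51, 26, 38, 21, 60, 33, 24, 41],
    "RelationshipStyle": ["mono", "mono", "poly", "poly", "mono", "poly",
                          "mono", "poly", "mono", "mono", "poly", "mono"],
})

def categorical_association(data, group_col, target="AIRISK"):
    """Return per-group means and a Kruskal-Wallis p-value across groups."""
    clean = data[[group_col, target]].dropna()
    means = clean.groupby(group_col)[target].mean()
    # One array of AIRISK answers per category, passed to the rank-based test.
    samples = [g[target].to_numpy() for _, g in clean.groupby(group_col)]
    _, p_value = stats.kruskal(*samples)
    return means, p_value

def numeric_association(data, col, target="AIRISK"):
    """Return the Pearson correlation between a numeric column and the target."""
    clean = data[[col, target]].dropna()
    return clean[col].corr(clean[target])

means, p_value = categorical_association(df, "RelationshipStyle")
r_age = numeric_association(df, "Age")
print(means)
print(f"Kruskal-Wallis p = {p_value:.4f}, r(Age, AIRISK) = {r_age:.3f}")
```

The Kruskal-Wallis test compares ranks rather than raw values, which is a sensible choice for a skewed variable like this one, where the mean (14%) sits well above the median (5%).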

Race (yes): p < .01

Middle Easterners and Indians most concerned about AI Risk. Blacks least concerned.

Sex (no): p = .48

Gender (yes): p < .001

Trans women and non-binary most concerned, trans men and cis people least concerned.

Sexual Orientation (yes): p < .001

Bisexuals and other divergents most concerned, Hetero/homosexuals least concerned.

Age (yes): p < .001, (r = -.129)

IQ (no): p = .46, r = .02 (note: the average is 140. Very selected sample.)

SAT scores (yes): p = .013, r = .05 (note: the average is 1485. Very selected sample.)

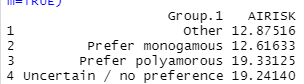

Relationship style (yes): p < .001

Polyamorous people more concerned than monogamous people.

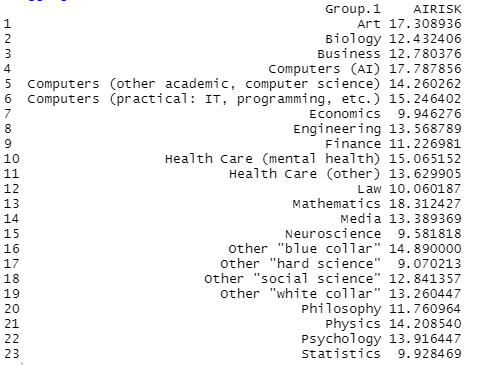

Field (yes): p < .001

Artists, AI researchers, and mathematicians are the most concerned of any field. Economists, neuroscientists, hard scientists, and statisticians are not so concerned.

Political Ideology (yes): p < .001

Conservatives less concerned than others.

Political Spectrum (1 - far left, 10 - far right | yes): p < .01

Far left very concerned, others not so much.

Having different political opinions from 10 years ago (yes): p < .001

Changing opinions predicts differences in general.

“Unhappiness” (derived from mood, anxiety, life satisfaction, romantic satisfaction, job satisfaction, social satisfaction, suicidality | yes): p < .001

r = .08
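The post does not spell out how the seven items were combined, so the following is only one plausible way to build such a composite: z-score each item, flip the ones where a higher answer means a happier respondent, and average. The column names, the three-item subset, and the responses are all hypothetical.

```python
import pandas as pd

# Hypothetical column names and invented responses, for illustration only.
items = pd.DataFrame({
    "Mood": [7, 3, 5, 8],
    "Anxiety": [2, 8, 5, 1],
    "LifeSatisfaction": [8, 2, 6, 9],
})

# Assumption: on these items a higher answer means a happier respondent,
# so they are sign-flipped before averaging.
HIGHER_IS_HAPPIER = ["Mood", "LifeSatisfaction"]

def unhappiness_score(data, positive_items):
    """Average of z-scored items, oriented so that higher means unhappier."""
    z = (data - data.mean()) / data.std(ddof=0)
    z[positive_items] = -z[positive_items]
    return z.mean(axis=1)

scores = unhappiness_score(items, HIGHER_IS_HAPPIER)
print(scores)
```

Standardizing first keeps an item with a wide answer scale from dominating the average. The resulting score can then be correlated with AIRISK like any other numeric variable.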

Having an inner voice (yes): p < .001

Preference for Humanities over STEM (1 - like STEM, 5 - like humanities | yes): p < .001

People who like humanities are less likely to believe in AI risk.

Thinking you had long COVID (yes): p < .01

Trusting the media (yes): p < .001

r = -.101 (more trust ←→ less risk)

BMI (yes): p < .001

Controlling for variables:

All variables were tested to see whether they held water controlling for media trust / unhappiness / BMI / age.
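One way to run a check like this, sketched under my own assumptions rather than taken from the linked code, is to regress AIRISK on the controls, keep the residuals, and rerun the Kruskal-Wallis test on those residuals. The data below is simulated so that the group difference is driven entirely by age. The names `Group`, `residualize`, and `controlled_kruskal` are hypothetical, and only two controls are used to keep the toy example short.

```python
import numpy as np
import pandas as pd
from scipy import stats

def residualize(data, target, controls):
    """Return the target minus its least-squares fit on the controls."""
    columns = [np.ones(len(data))] + [data[c].to_numpy(float) for c in controls]
    X = np.column_stack(columns)
    y = data[target].to_numpy(float)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return pd.Series(y - X @ beta, index=data.index)

def controlled_kruskal(data, group_col, target, controls):
    """Kruskal-Wallis p-value for group differences in the residualized target."""
    clean = data[[group_col, target] + controls].dropna()
    resid = residualize(clean, target, controls)
    groups = clean.groupby(group_col).groups  # maps label -> row index
    samples = [resid.loc[idx].to_numpy() for idx in groups.values()]
    return stats.kruskal(*samples).pvalue

# Simulated data, NOT survey responses: Group depends only on Age,
# and AIRISK depends only on Age plus noise.
rng = np.random.default_rng(0)
n = 200
age = rng.uniform(18, 70, n)
toy = pd.DataFrame({
    "Age": age,
    "BMI": rng.normal(25, 4, n),
    "Group": np.where(age < 35, "A", "B"),
    "AIRISK": 40 - 0.5 * age + rng.normal(0, 5, n),
})

p_raw = controlled_kruskal(toy, "Group", "AIRISK", [])
p_controlled = controlled_kruskal(toy, "Group", "AIRISK", ["Age", "BMI"])
print(f"zero-order p = {p_raw:.2g}, after controls p = {p_controlled:.2g}")
```

With an empty control list the residuals are just AIRISK minus its mean, so the first call reproduces the zero-order test. A regression with the grouping variable entered alongside the controls would be another reasonable way to ask the same question.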

The following did not pass significance after these 4 controls:

Race

Gender

Sex

Having an inner voice

Interest in STEM

IQ

SAT

The following still passed significance after these 4 controls:

Moral Views (theory: Eliezer influence)

Sexual Orientation (theory: confounded by openness and mental illness)

Being poly (theory: confounded by the degree to which you are down the LW rabbit hole)

Profession (theory: interest in AI causes stronger positions on this issue, not sure why mathematicians are so afraid)

Political ideology (theory: leftists more concerned with acceleration, rightists not as much)

Political spectrum (theory: leftists more concerned with acceleration, rightists not as much)

Changing political views (theory: confounded by openness)

Unhappiness (theory: crazy person → crazy beliefs)

Trust in media (theory: people who don’t trust media will take anti-establishment positions)

BMI (theory: covaries with anxiety/status seeking)

Age (theory: AI risk is a new concern, older individuals are not as interested)

Having long COVID (p = .03) (theory: crazy person → crazy beliefs)

I am curious if there is a correlation between taking Adderall regularly and worrying about AI risk.

AI will destroy or at least drastically reduce leftism in entertainment. Dubbing will become easier and faster, and being an OnlyFans model or porn star will be over once AI-generated images are realistic enough.